Dynamic Online Tests with Blackboard and R/exams

Online tests and quizzes in learning management systems are a highly useful tool. Students can use them to practice course materials, and teachers can use them to monitor the progress students make while working on their exercises. However, to provide enough variation and to prevent cheating, it is often necessary to generate many variants of each exercise in such online tests.

The following demonstrates how R/exams and its exams2blackboard() function can be used to create “dynamic” online tests and quizzes, as well as large item pools, for the learning management system Blackboard. The discussion is based on experiences at the University of Amsterdam, in the Bachelor programme Child Development and Education, where Blackboard testing facilities are used extensively in methods and statistics courses: students take formative tests to prepare for practicals, and teachers use the results to optimize the content of those practicals.

Testing in Blackboard

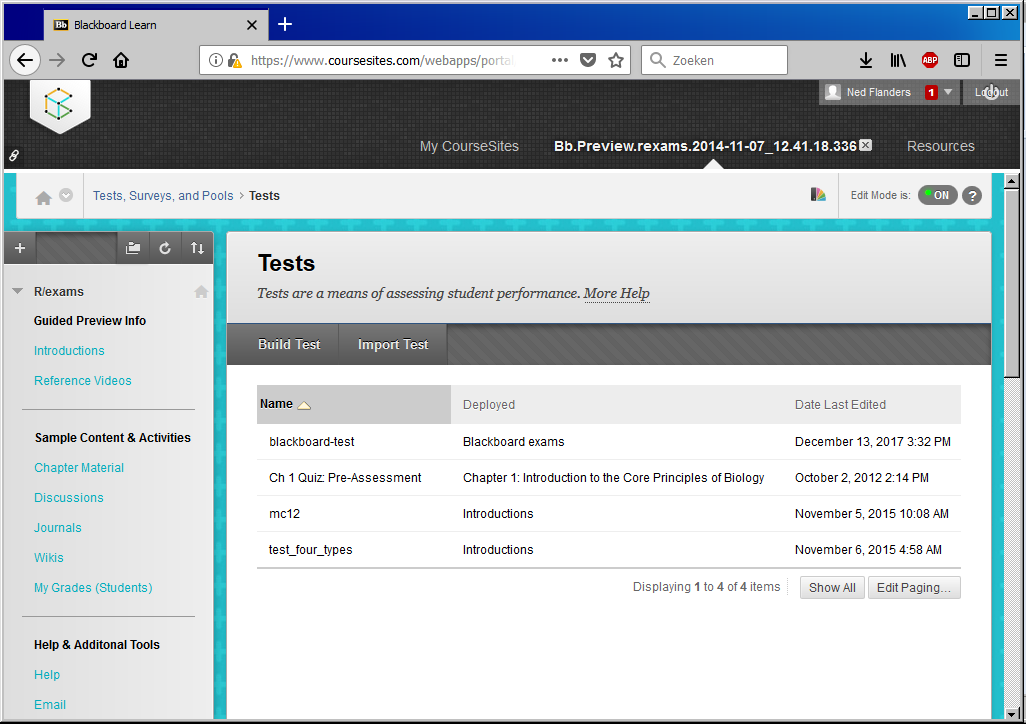

In the Blackboard system, the Tests, Surveys and Pools section can be accessed from the Control Panel under Course Tools.

If an exam created with R/exams is imported into Blackboard, the material will appear both in the ‘Tests’ module and ‘Pools’ module. Tests are sets of questions that make up an exam. Once an exam is created here, it may be deployed within a content folder for administering it to students. Test results appear in the Grade Centre, which is a spreadsheet-like database for storing student results. Pools are sets of questions that can be added to any Test, and are useful for storing questions in the system. So, R/exams may be used to either construct a full exam that can be readily deployed in Blackboard, or to create item pools that can be used as input for exams created within the Blackboard system itself. For documentation by Blackboard on its testing facilities, see the Blackboard Help pages.

Exercise types

In its testing facilities, Blackboard employs the international QTI 1.2 (Question & Test Interoperability) standard. However, because Blackboard uses its own flavor of QTI 1.2, exams2qti12() from R/exams could not simply be reused, and a separate function, exams2blackboard(), was created. It supports four types of R/exams exercises in the Blackboard testing system:

| R/exams name | Blackboard type |
|---|---|
| num | Calculated numeric |
| schoice | Multiple choice |
| mchoice | Multiple answer |
| string | Fill in the blank |

The R/exams cloze type is covered by the QTI 1.2 standard but is not officially supported by Blackboard. Hence, this exercise type is not supported by the current version of exams2blackboard() either, as no workaround for implementing it in Blackboard has been found (yet).

For an overview of question types in Blackboard’s testing module, see Question types.

Creating a first exam

The first step in creating an exam is to construct its constituent questions; for an introduction to designing exercises with R/exams, see the First Steps tutorial. Next, the exercises are gathered into an exam. To that end, after loading the exams package in R, a list of the exercise file names is created:

```r
library("exams")

myexam <- list(
  "tstat.Rnw",
  "tstat2.Rnw",
  "relfreq.Rnw",
  "anova.Rnw",
  "boxplots.Rnw"
)
```

Here, we use a collection of exercises that ship with the package: one num (tstat), one schoice (tstat2), and three mchoice (relfreq, anova, boxplots) questions. The exercises above are in .Rnw format, but all of the examples are also available in .Rmd format, which leads to virtually identical output.
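Since the example exercises also ship as .Rmd files, switching the list to the R Markdown versions only requires changing the file extension. A minimal base R sketch, assuming (as stated above for the package examples) that each .Rnw file has an .Rmd counterpart with the same name; `myexam_rmd` is just an illustrative variable name:

```r
# Exercise list using the .Rnw versions shipped with the exams package
myexam <- list("tstat.Rnw", "tstat2.Rnw", "relfreq.Rnw", "anova.Rnw", "boxplots.Rnw")

# Swap the file extension to refer to the equivalent .Rmd versions;
# lapply() keeps the list structure expected by the exams2*() functions
myexam_rmd <- lapply(myexam, function(f) sub("\\.Rnw$", ".Rmd", f))
unlist(myexam_rmd)
```

The resulting list can be passed to exams2html() or exams2blackboard() in place of myexam.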

Next, as a first check of the exam and its questions, it is convenient to generate a single HTML version on the fly and view it in the browser before importing one or more versions into Blackboard. Alternatively or additionally, a PDF version can easily be generated.

```r
exams2html(myexam)
exams2pdf(myexam)
```

After a thorough inspection, the exam may be converted to Blackboard format. Let’s start with the simple case of a single copy per exercise:

set.seed(1234)

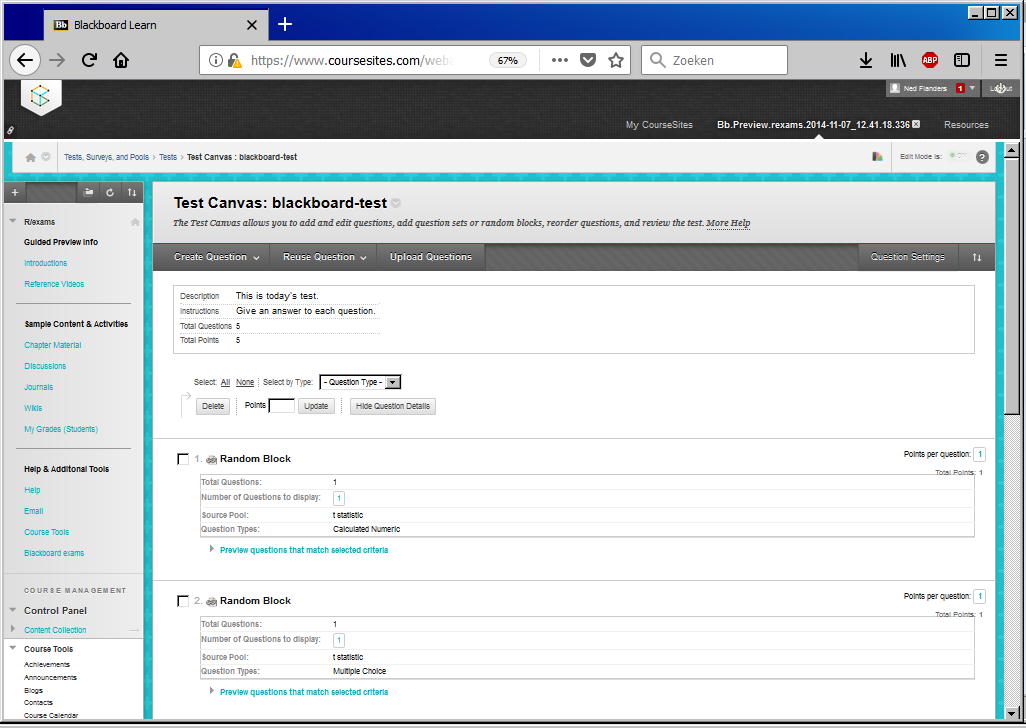

exams2blackboard(myexam)As the five exercises have a dynamic nature (i.e., numbers are drawn randomly), a random seed is set to make the generated exams exactly reproducible for you (or ourselves at some point in the future). In the working directory a zip file named blackboard.zip is created which contains an exam consisting of five item pools, each containing a single copy of an exercise.

Uploading exams into Blackboard

Next, the exam blackboard.zip is imported in Blackboard using Import Test in the Tests menu. Below, it can be seen that importing was successful: It now appears as blackboard-test in the menu along with three other exams.

The exam may be inspected, and exercises may be editted and saved:

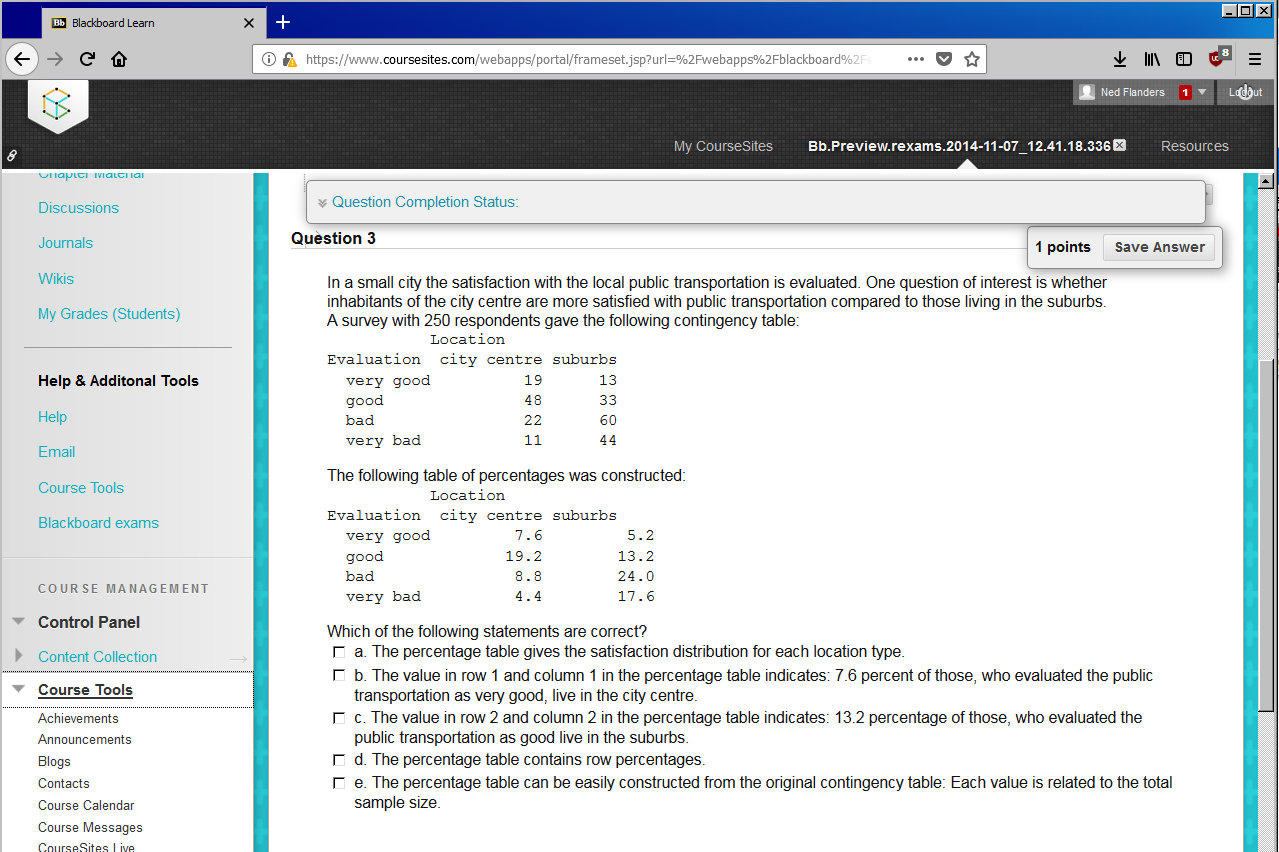

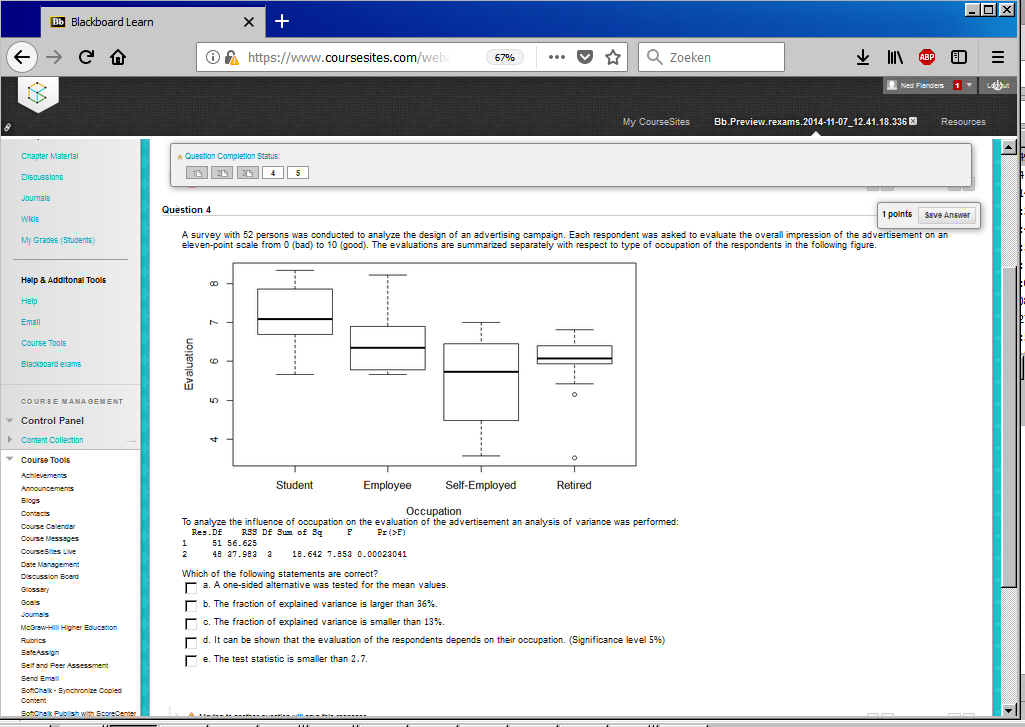

The preview that is available in this menu does not provide an accurate impression of what exercises look like during the actual administration of the test. Therefore, it is advised to activate the exam (for instructors only) and inspect it in an actual administration. Below your see an example of a test administration:

Setting options

Several arguments may be specified to adapt the exam to meet one’s preferences. For example, the name of the zip file may be set, and the number of copies drawn for each exercise may be changed:

```r
set.seed(1234)
exams2blackboard(myexam, n = 3, name = "myexam")
```

This code creates a zip file named myexam.zip in the working directory, with three copies of each exercise.
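To keep generated files out of the working directory, the output location can be set with the dir argument, and the archive can be listed with base R's unzip(list = TRUE) before uploading it. A sketch assuming the exams package is installed; the temporary output directory is an illustrative choice, and the file layout inside the zip is an internal detail of Blackboard's import format, so the listing is only a quick sanity check:

```r
library("exams")

myexam <- list("tstat.Rnw", "tstat2.Rnw", "relfreq.Rnw", "anova.Rnw", "boxplots.Rnw")

# Write the zip file to a separate output directory (illustrative location)
outdir <- file.path(tempdir(), "bb-output")
dir.create(outdir, showWarnings = FALSE)

set.seed(1234)
exams2blackboard(myexam, n = 3, name = "myexam", dir = outdir)

# List the files inside the archive without extracting them
zipfile <- file.path(outdir, "myexam.zip")
contents <- unzip(zipfile, list = TRUE)
contents$Name
```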

Some more details

Decimal marks

When a non-integer numerical answer is required for num exercises, it is important that students use the correct decimal mark when typing the number into the answer box in Blackboard’s testing environment. The decimal mark depends on the language settings of the individual student in Blackboard. For example, if the available languages are English and Dutch, English requires a point (“.”) as decimal mark and Dutch requires a comma (“,”). For student-level language settings, see Personal settings. Students should be informed explicitly about which decimal mark to use in their tests, because an inappropriate decimal mark causes the answer to be graded as incorrect. Alternatively, one may Enforce the Language Pack, forcing students to use a given language (and the matching decimal mark). Note that this issue is specific to Blackboard and has nothing to do with how exercises are created with R/exams; it is simply an issue we encountered at the University of Amsterdam while deploying online tests.
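One way to inform students is to state a numeric value in both notations, for example in the question text or in the solution. A minimal base R sketch; both_marks() is a hypothetical helper (not part of R/exams) that formats a value with a fixed number of digits and then swaps the point for a comma:

```r
# Hypothetical helper (not part of R/exams): show a numeric value with
# both decimal marks, e.g., for a hint in the question or solution text
both_marks <- function(x, digits = 2) {
  point <- formatC(x, format = "f", digits = digits)  # English settings: "."
  comma <- chartr(".", ",", point)                    # Dutch settings: ","
  sprintf("%s (English settings) or %s (Dutch settings)", point, comma)
}

both_marks(3.14159)
# returns "3.14 (English settings) or 3,14 (Dutch settings)"
```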

Internet browser

By default, exams2blackboard() converts mathematical notation to MathML. Hence, it is advisable to administer Blackboard tests in a web browser that supports MathML, such as Mozilla Firefox or Safari. Of these two, Firefox shows the best performance, and Safari does a fairly decent job. Therefore, students should be instructed to open their tests in one of these browsers. Note that, at the time of writing, Chrome did not support MathML.

Alternatively, exams2blackboard(..., converter = "pandoc-mathjax") embeds mathematical formulas for the MathJax plugin, which would need to be available in the Blackboard system. As we did not have access to a MathJax-enabled Blackboard system, we were not able to test this option, though.
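For comparison, both variants can be generated side by side. A sketch assuming the exams package and pandoc are installed (the pandoc-based converters rely on it); the file names myexam-mathml and myexam-mathjax are arbitrary, and, as noted above, the MathJax variant has not been tested in Blackboard:

```r
library("exams")

myexam <- list("tstat.Rnw", "tstat2.Rnw", "relfreq.Rnw", "anova.Rnw", "boxplots.Rnw")

# Default converter: formulas are delivered as MathML,
# so students need a MathML-capable browser
set.seed(1234)
exams2blackboard(myexam, name = "myexam-mathml")

# Untested alternative: formulas are prepared for a MathJax plugin,
# which must be available in the Blackboard installation
set.seed(1234)
exams2blackboard(myexam, name = "myexam-mathjax", converter = "pandoc-mathjax")
```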

Evaluation policies

Evaluation of num, schoice, and string exercises generated by R/exams is relatively straightforward: answers are either correct or wrong. For mchoice exercises, however, there is more flexibility: either all parts of an answer have to be exactly correct, or partial credit can be assigned. The exams2blackboard() function allows for specifying evaluation policies and passing them to Blackboard. For more details, see ?exams_eval.
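A sketch of both sides, assuming the exams package is installed: exams_eval() sets up a policy object that can be inspected in R, and the eval argument of exams2blackboard() takes the same settings as a list. The all-or-nothing policy and the file name myexam-strict are illustrative choices; see ?exams_eval for the exact definition of each rule:

```r
library("exams")

myexam <- list("tstat.Rnw", "tstat2.Rnw", "relfreq.Rnw", "anova.Rnw", "boxplots.Rnw")

# Inspect a policy: partial credit for mchoice, no negative total,
# and the "false2" rule for deductions for wrongly ticked options
ev <- exams_eval(partial = TRUE, negative = FALSE, rule = "false2")
str(ev, max.level = 1)

# All-or-nothing scoring: only completely correct answers earn points
set.seed(1234)
exams2blackboard(myexam, name = "myexam-strict",
                 eval = list(partial = FALSE, negative = FALSE))
```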

Mix of exercises

If exercises are developed for creating Blackboard item pools (as opposed to exams), it is advisable not to mix several exercises into one pool, as is done with regression and anova here:

```r
library("exams")

myexam <- list(
  "boxplots",
  "tstat",
  c("regression", "anova"),
  "scatterplot",
  "relfreq"
)
```

When combining two or more different exercises in one list element, the name and type of the exercise that is drawn first (in the random sampling) will appear as the name and type of the item pool in Blackboard, which may lead to administrative issues in the system.

For creating exams, however, mixing is no issue.
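Before generating item pools, a quick check can catch list elements that combine several exercises. A minimal base R sketch; check_pool_list() is a hypothetical helper, not part of R/exams, and it only inspects the structure of the list (it does not read the exercise files):

```r
# Hypothetical helper (not part of R/exams): warn about list elements that
# mix several exercises, which would end up sharing one Blackboard item pool.
# Returns (invisibly) TRUE for elements that hold a single exercise.
check_pool_list <- function(exlist) {
  mixed <- lengths(exlist) > 1
  if (any(mixed)) {
    warning("Mixed exercises in element(s) ", paste(which(mixed), collapse = ", "),
            ": ", paste(sapply(exlist[mixed], paste, collapse = "/"), collapse = "; "))
  }
  invisible(!mixed)
}

myexam <- list("boxplots", "tstat", c("regression", "anova"), "scatterplot", "relfreq")
check_pool_list(myexam)   # warns that element 3 mixes regression and anova

# For item pools, give each exercise its own list element instead
mypools <- as.list(unlist(myexam))
check_pool_list(mypools)  # no warning
```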

Importing Blackboard material into other learning systems

Various other learning management systems allow for importing tests and item pools created in Blackboard. Thus, in principle, exams created using exams2blackboard() may be uploaded into those systems as well. We did some limited trials with Desire2Learn (D2L) and Canvas, which suggest that this import route works. However, more trials and possibly further fine-tuning may be needed for things to work properly.