Using R/exams for Short Exams during a Statistics Course

Guest post by Ralf B. Schäfer (Universität Koblenz-Landau, Institute for Environmental Sciences).

Background

In many degree programs in Germany, exams are written directly after the course period, which results in a high workload for students within a short time. Although we had recommended that students learn and practice continuously throughout the course, few of them followed this advice. Therefore, after discussion with the student representatives, we decided to replace the final exam of our course in Applied Statistics for Environmental Scientists with six short exams. The exams were held approximately every third week and were designed to be completed within 15 minutes. Given the resulting increase in the number of exams and in workload for a course with almost 100 students, we decided to transition to automated exams.

Exam implementation

After a brief review of available tools, we identified R/exams as matching our requirements for automation, which were the following:

- easy to set up,

- availability of supporting resources such as tutorials and examples,

- usable for paper exams,

- mixed exercises possible (e.g., free text, multiple choice),

- automated creation of randomized exams and exercises,

- automated evaluation of filled exams.

Our implementation was guided by the supporting resources available at https://www.R-exams.org/tutorials/ and https://CRAN.R-project.org/package=exams (vignettes). These resources made the transition to automated exams very smooth, and we strongly recommend digging into them if you plan to use the package. Notably, the help from the package developer Achim Zeileis with a few minor glitches was incredibly fast and supportive. The main work in the transition was recasting our questions and exercises into the R/Markdown format employed by R/exams (alternatively, R/LaTeX can be used).

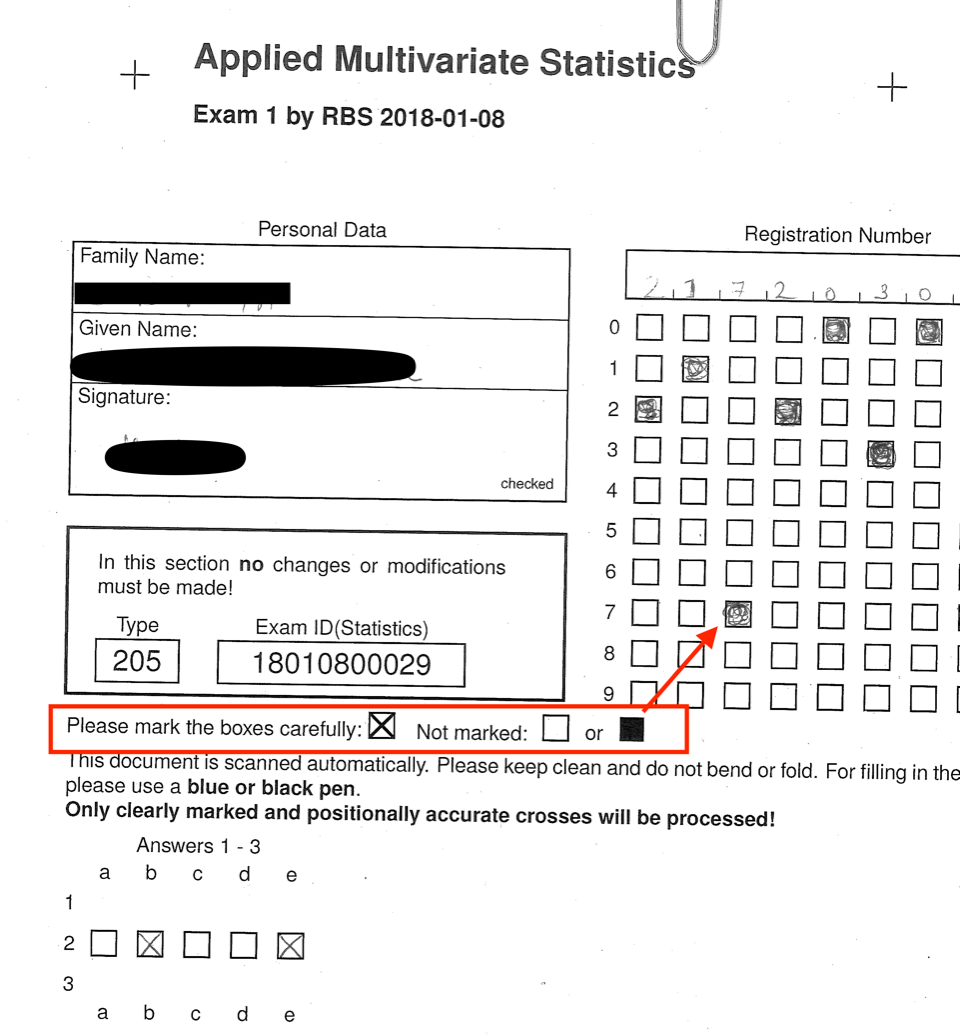

The short written exams were generated by exams2nops using a mix of multiple-choice and numeric exercises (treated as open-ended questions by exams2nops). As an example, the code below creates a PDF (nops1.pdf) from three such exercise templates (1_mch_GLM_LM.Rmd, 1_num_multi.Rmd, 2_mch_PCA.Rmd) along with the institute logo (logo.png). The multiple-choice exercises can be scanned fully automatically while the open-ended question has to be marked manually before being scanned as well.

```r
## load package
library("exams")

## exams2nops: PDF output in NOPS format (1 file per exam)
## -> for exams that can be printed, scanned, and automatically evaluated in R

## define an exam (= list of exercises)
myexam <- list("1_mch_GLM_LM.Rmd", "1_num_multi.Rmd", "2_mch_PCA.Rmd")

## fix the random seed so that the same exam can be regenerated later
## (note: 2018-02-05 is evaluated as arithmetic, i.e., the seed is 2011)
set.seed(2018-02-05)

## create PDFs in working directory
ex1 <- exams2nops(myexam, n = 1, dir = ".", title = "Exam 3 by RBS",
  institution = "Applied Multivariate Statistics", date = "2018-02-05",
  course = "Statistics", reglength = 9, points = c(4, 2, 2),
  showpoints = TRUE, duplex = FALSE, blank = 0,
  logo = file.path(getwd(), "logo.png"))
```
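Conceptually, the automated evaluation compares the boxes marked on each scanned sheet with the stored solutions and converts the result into points. R/exams provides its own configurable evaluation rules (see exams_eval()), so the following is only a minimal base-R sketch of the idea under stated assumptions: the helper score_mchoice() and its partial-credit rule are hypothetical and are not the scheme that exams2nops or nops_eval actually apply.

```r
## Hypothetical partial-credit rule for one multiple-choice exercise
## (illustration only, NOT the rule used by exams2nops/nops_eval).
## solution, answer: logical vectors, TRUE = box should be / was checked
score_mchoice <- function(solution, answer, points = 1) {
  stopifnot(is.logical(solution), is.logical(answer),
            length(solution) == length(answer))
  n_true <- sum(solution)
  ## no correct option: full points only if nothing was checked
  if (n_true == 0) return(if (!any(answer)) points else 0)
  ## each correctly checked box earns points / n_true,
  ## each wrongly checked box costs the same amount; never below 0
  hits   <- sum(answer & solution)
  misses <- sum(answer & !solution)
  max(0, (hits - misses) * points / n_true)
}

## one hypothetical exam sheet with two multiple-choice exercises
## (open-ended, manually marked questions are not covered here)
solutions <- list(c(TRUE, FALSE, TRUE, FALSE, FALSE),
                  c(FALSE, TRUE, FALSE, FALSE, FALSE))
answers   <- list(c(TRUE, FALSE, FALSE, FALSE, FALSE),
                  c(FALSE, TRUE, FALSE, FALSE, FALSE))
points    <- c(4, 2)

total <- sum(mapply(score_mchoice, solutions, answers, points))
total  ## 4 (2 points for 1 of 2 correct boxes, plus 2 points)
```

Flooring at zero keeps a single exercise from producing negative totals; whether wrong marks should be penalized at all is a design decision each course has to make.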

The transition came with several advantages, particularly the ability to create completely randomized exams:

- The answers to multiple-choice questions can be shuffled (by setting exshuffle: TRUE in the metadata of the R/Markdown files; see 1_mch_GLM_LM.Rmd and 2_mch_PCA.Rmd).

- The questions included in a single exam can be randomly sampled from a pool of questions by using vectors as list elements when defining the list of exercises/questions.

- Questions relying on data sets can be randomized through resampling (see 1_num_multi.Rmd for an example). The following lines represent the crucial part of the code to generate a random data set:

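```r
## load the example data (requires the HSAUR2 package)
data("USairpollution", package = "HSAUR2")

## draw a random sample size and resample rows with replacement,
## so that each generated exam works with a different data set
nsize <- sample(50:150, 1)
sampled_dat <- sample(seq_len(nrow(USairpollution)), nsize, replace = TRUE)
final_data <- USairpollution[sampled_dat, ]
```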
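To make the three randomization mechanisms above concrete, here is a base-R illustration. It only mimics what happens and does not call R/exams: the alternative file names (e.g., 1_mch_GLM_LM_b.Rmd), the placeholder answer options, and the regression-slope "solution" are hypothetical, and the built-in mtcars data stand in for HSAUR2's USairpollution so that the sketch runs without extra packages. In a real exam, a pool definition like the one below would simply be passed to exams2nops() in place of myexam.

```r
set.seed(1)

## 1. Pools: each list element is a vector of alternative exercise files
##    (hypothetical names); one file is drawn per element
pools <- list(c("1_mch_GLM_LM.Rmd", "1_mch_GLM_LM_b.Rmd"),
              "1_num_multi.Rmd",
              c("2_mch_PCA.Rmd", "2_mch_PCA_b.Rmd", "2_mch_PCA_c.Rmd"))
drawn <- vapply(pools, function(p) p[sample.int(length(p), 1)], character(1))

## 2. Shuffling: permute the answer options and the solution vector
##    with the same permutation so they stay aligned
answers  <- c("Answer A", "Answer B", "Answer C", "Answer D")
solution <- c(TRUE, FALSE, FALSE, TRUE)
perm <- sample.int(length(answers))
shuffled_answers  <- answers[perm]
shuffled_solution <- solution[perm]

## 3. Resampling: bootstrap a data set of random size and compute the
##    (hypothetical) numeric solution from exactly this sample
nsize <- sample(50:150, 1)
idx <- sample(seq_len(nrow(mtcars)), nsize, replace = TRUE)
final_data <- mtcars[idx, ]
fit <- lm(mpg ~ wt, data = final_data)
slope <- round(unname(coef(fit)["wt"]), 2)
```

The key point in step 3 is that the solution is computed from the resampled data rather than stored as a constant, so every randomized version of the exercise carries its own correct answer.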

Challenges and outlook

Randomization is particularly valuable because the exams can be reused and students cannot copy from other students' exams (at least not as easily). The main challenges in using R/exams were actually due to human fallibility. Although the instructions were stated on the exam and explained beforehand, several students filled in their exams incorrectly, e.g., by not marking the boxes on the exam sheet correctly, which required manual checking and correction.

Such cases affected about 5-10% of exams in the first round and less than 5% in subsequent rounds. Overall, R/exams saved us many hours of grading time; we will certainly continue using it, and it has also attracted the attention of other faculty members. In the future, we plan to complement our course with practical exercises in R using the learning management platform OpenOlat. For this, we will benefit from the package's options to create exercises and export them to learning management platforms such as OpenOlat or Moodle.